Effectiveness of a virtual reality simulator for spine procedure education in trainees: a randomized controlled trial


Abstract

Background

Since the onset of the coronavirus disease 2019 pandemic, virtual simulation has emerged as an alternative to traditional teaching methods as it can be employed within the recently established contact-minimizing guidelines. This prospective education study aimed to develop a virtual reality simulator for a lumbar transforaminal epidural block (LTFEB) and demonstrate its efficacy.

Methods

We developed a virtual reality simulator using patient image data processing, virtual X-ray generation, spatial registration, and virtual reality technology. For a realistic virtual environment, a procedure room, surgical table, C-arm, and monitor were created. Using the virtual C-arm, X-ray images of the patient’s anatomy, the needle, and the indicator were obtained in real time. After the simulation, trainees could receive feedback by adjusting the visibility of structures such as skin and bones. LTFEB training with the simulator was evaluated in 20 inexperienced trainees. The trainees’ procedural time, rating scores, number of C-arm images taken, and overall satisfaction were recorded as outcomes.

Results

The group using the simulator showed a higher global rating score (P = 0.014), reduced procedural time (P = 0.025), reduced number of C-arm uses (P = 0.001), and higher overall satisfaction score (P = 0.007).

Conclusions

We created an accessible and effective virtual reality simulator that can be used to teach inexperienced trainees LTFEB without radiation exposure. The results of this study indicate that the proposed simulator will prove to be a useful aid for teaching LTFEB.

Introduction

Traditionally, cadaver training has been the preferred method of teaching spine-related procedures [1]. Practicing techniques on cadavers gives inexperienced doctors procedural experience without putting patients at risk [2]. However, because training on a cadaver requires at least one trainee, one radiographer, and one skilled instructor, crowding cannot be avoided [3]. Consequently, cadaver training for procedures has dwindled under the social distancing measures implemented during the coronavirus disease 2019 pandemic [4]. The pandemic has acted as a catalyst for a paradigm shift in traditional education; traditional methods are expected to be eventually replaced by alternatives such as online education, 3D printing, multimedia resources, and virtual/augmented reality [5,6].

Recently, virtual reality simulators (VRS) have been developed for various medical applications, such as pain modulation and rehabilitation, and for instruction in surgical procedures [7–9]. For teaching C-arm-guided spine procedures, the advantage of VRS over cadaver training is that trainees avoid radiation exposure [7–12]. Its advantage over traditional hands-on training is that it can shorten the trainee’s learning curve [13,14].

To the best of our knowledge, no VRS has previously been developed for training in C-arm-guided spine procedures. Developing such a simulator requires, in addition to virtual reality (VR) technology, medical image processing, virtual X-ray generation, and spatial registration.

In this study, we created such a simulator for teaching spine procedures and hypothesized that, compared with commonly used learning methods, it would affect the learning efficacy, procedure time, and radiation exposure of inexperienced trainees. To test this, we evaluated the efficacy of our VRS system in teaching lumbar transforaminal epidural block (LTFEB) to inexperienced trainees.

Materials and Methods

Simulator development

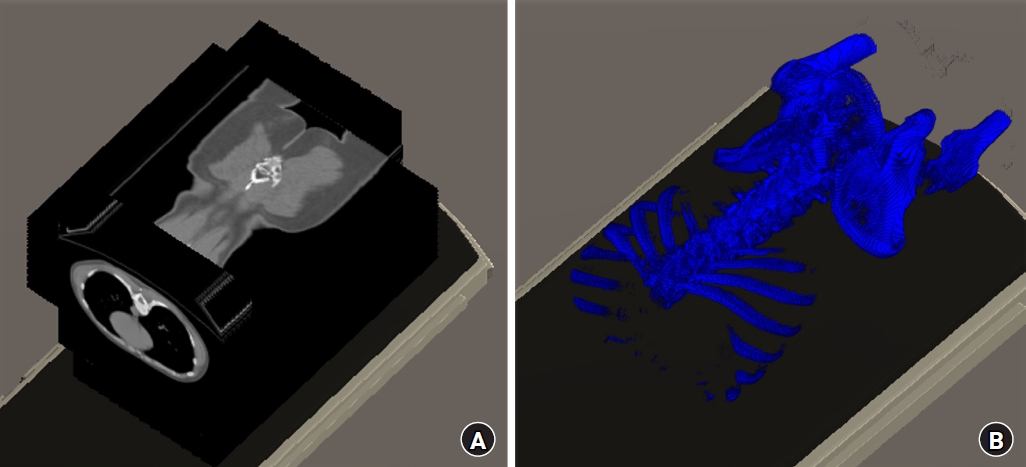

3D polygonized model preparation

An anonymized lumbar computed tomography (CT) data file in digital imaging and communications in medicine (DICOM) format was obtained from the anonymized data server of Korea University Anam Hospital. A three-dimensional (3D) polygonized model of the spine was generated using 3D Slicer® (version 4.10.2; Harvard University, USA). Separate 3D models were obtained for each of the five lumbar vertebrae, the sacrum, and the pelvic bones, together with the shapes of the intervertebral discs between them. Each vertebra comprised approximately 60,000 to 120,000 polygons, which risked degrading performance if the models were used directly in the program. Therefore, we reduced the polygon count of each bone to 5% of its original value using MeshMixer® (version 3.5; Autodesk Inc., USA).
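As a quick check on the decimation figures, keeping 5% of the polygons gives the counts below. This is only the arithmetic implied by the text; it does not describe how MeshMixer selects which polygons to remove.

```latex
N_{\text{reduced}} = 0.05\,N_{\text{original}}, \qquad
0.05 \times 60{,}000 = 3{,}000, \qquad
0.05 \times 120{,}000 = 6{,}000
```

Each decimated vertebra therefore retains roughly 3,000 to 6,000 polygons.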

DICOM data manipulation

To manage DICOM files in the virtual space, we built a program that could read and write DICOM files in the UNITY® software environment (version 4.2; Unity Technologies, USA). The Grassroots DICOM (GDCM) library (version 3.0; http://gdcm.sourceforge.net) was prepared for use in Visual Studio 2019 (version 16.10.3; Microsoft, USA) and UNITY using CMake (version 3.119.1; Kitware, Inc., USA) and the Simplified Wrapper and Interface Generator (SWIG; version 4.0.2; http://www.swig.org/).

After GDCM was set up, DICOM data were read as binary data, parsed by tag, and stored in separate arrays. The tags used were image type (Tag# 0008,0008), image orientation (Tag# 0020,0037), instance number (Tag# 0020,0013), image position (Tag# 0020,0032), pixel spacing (Tag# 0028,0030), window center (Tag# 0028,1050), window width (Tag# 0028,1051), rescale intercept (Tag# 0028,1052), rescale slope (Tag# 0028,1053), and pixel data (Tag# 7FE0,0010).
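The orientation, position, and pixel spacing tags are enough to place a slice in space. The Python sketch below illustrates this under stated assumptions: it is not the authors' UNITY/GDCM code, the helper names `classify_plane` and `slice_corners` are hypothetical, and the tag values are assumed to be already parsed into plain tuples. It follows the DICOM convention that the first three Image Orientation values give the direction along a row (increasing column index) and that Pixel Spacing lists the row spacing before the column spacing.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def classify_plane(orientation: Sequence[float]) -> str:
    """Label a slice axial, coronal, or sagittal from Image Orientation (0020,0037).

    The slice normal is the cross product of the row and column direction
    cosines; its dominant patient axis (x: right-left, y: anterior-posterior,
    z: inferior-superior) decides the label.
    """
    normal = cross(orientation[:3], orientation[3:6])
    axis = max(range(3), key=lambda k: abs(normal[k]))
    return ("sagittal", "coronal", "axial")[axis]


def slice_corners(position: Sequence[float], orientation: Sequence[float],
                  spacing: Sequence[float], rows: int, cols: int) -> List[Vec3]:
    """Patient-space centres (mm) of the four corner pixels of one slice.

    position: Image Position (0020,0032), the centre of the first pixel.
    spacing:  Pixel Spacing (0028,0030) = (row spacing, column spacing).
    """
    row_dir = orientation[:3]   # moving along a row (increasing column index)
    col_dir = orientation[3:6]  # moving down a column (increasing row index)
    row_spacing, col_spacing = spacing

    def point(col: int, row: int) -> Vec3:
        return tuple(position[k] + col * col_spacing * row_dir[k]
                     + row * row_spacing * col_dir[k] for k in range(3))

    return [point(0, 0), point(cols - 1, 0),
            point(0, rows - 1), point(cols - 1, rows - 1)]
```

The dominant-axis rule is one simple way to label slightly oblique slices; the text does not state which rule was used.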

The Hounsfield unit (HU) value of each pixel was obtained by multiplying the stored pixel value by the rescale slope and adding the rescale intercept. CT images were then generated by mapping these HU values to pixel colors according to the window center and window width. The four corner coordinates of each image were stored in another array for subsequent virtual X-ray generation. Each image was classified as axial, coronal, or sagittal from its image orientation value and positioned in the virtual space using its image position and pixel spacing values (Fig. 1A). A 3D bone-like image was obtained by displaying only pixels with HU values between 300 and 2,000 and rendering all remaining pixels with transparent materials (Fig. 1B).
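The rescaling, windowing, and bone-threshold steps can be sketched in a few lines of Python. The linear window here is a simplified assumption (values are clipped to 0 to 255 across center ± width/2), and the function names are illustrative; only the rescale relation and the 300 to 2,000 HU bone range come from the text.

```python
from typing import List, Sequence


def to_hu(stored: Sequence[float], slope: float, intercept: float) -> List[float]:
    """Rescale stored pixel values to Hounsfield units: HU = stored * slope + intercept."""
    return [v * slope + intercept for v in stored]


def window_to_gray(hu_values: Sequence[float], center: float, width: float) -> List[int]:
    """Map HU values to 8-bit gray levels with a simplified linear window.

    Values at or below center - width/2 become 0, values at or above
    center + width/2 become 255, and values in between scale linearly.
    (The DICOM standard's exact linear VOI formula uses slightly different
    offsets, center - 0.5 and width - 1.)
    """
    lower = center - width / 2.0
    gray = []
    for hu in hu_values:
        g = (hu - lower) / width * 255.0
        gray.append(int(round(min(max(g, 0.0), 255.0))))
    return gray


def bone_mask(hu_values: Sequence[float], lo: float = 300.0, hi: float = 2000.0) -> List[bool]:
    """True where a pixel is drawn in the bone-like view; False pixels are made transparent."""
    return [lo <= hu <= hi for hu in hu_values]


hu = to_hu([0, 1024, 2024, 3500], slope=1.0, intercept=-1024.0)
print(hu)             # [-1024.0, 0.0, 1000.0, 2476.0]
print(bone_mask(hu))  # [False, False, True, False]
```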

DICOM data manipulation. (A) Axial, coronal, and sagittal computed tomography images positioned in the virtual space. (B) 3D bone-like images obtained by visualizing only HU values between 300 and 2,000. DICOM: digital imaging and communications in medicine, HU: Hounsfield unit, 3D: three-dimensional.

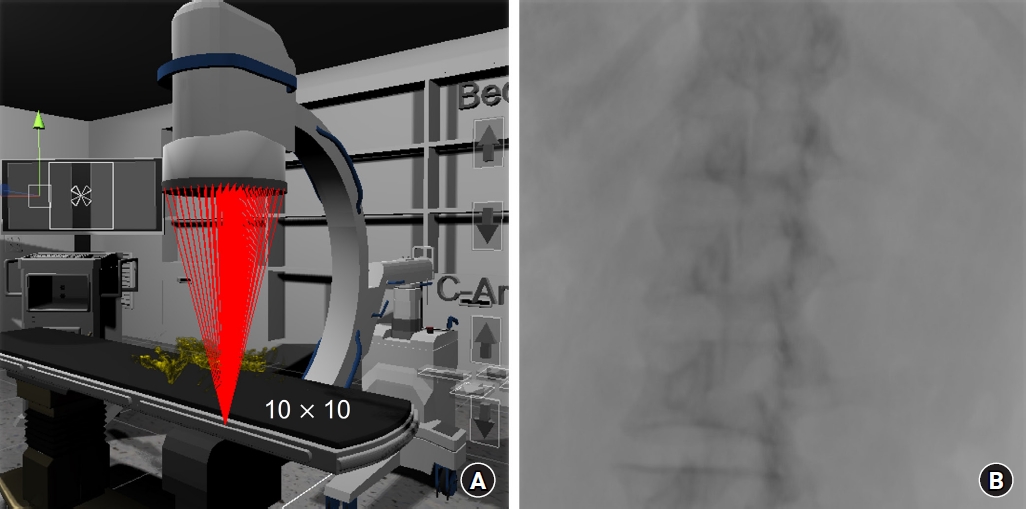

Virtual C-arm development

For a realistic simulation, a 3D polygon model of the C-arm was created. The C-shaped arm, to which the X-ray generator and detector were attached, was made capable of rotation, tilting, and vertical (height) movement, and the entire C-arm could be translated in four directions. A virtual X-ray generator and detector were placed in the virtual space. The detector area between its corner points was subdivided into a grid of x × y points according to the desired resolution, and a virtual X-ray was cast from the generator to each grid point (Fig. 2A). For each X-ray, the point at which it crossed each CT slice was calculated from a ratio of dot products involving the ray and the slice's reference point, and the HU value at that point was obtained. The HU values along the ray were summed and divided by the total number of CT slices to estimate X-ray absorption; these values were converted into pixel values and arranged sequentially to form the virtual X-ray image (Fig. 2B).
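The ray-casting step can be sketched as follows, assuming each CT slice is an oriented plane with a reference point (the centre of its first pixel), row and column direction vectors, pixel spacing, and a 2D grid of HU values. This is an illustrative Python reconstruction of the described procedure (ray–plane intersection via a ratio of dot products, HU lookup, sum divided by the number of slices), not the authors' UNITY code; nearest-pixel sampling and all helper names are assumptions, and the final conversion to display pixel values is omitted.

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


@dataclass
class Slice:
    origin: Vec3                  # reference point: centre of pixel (row 0, col 0)
    row_dir: Vec3                 # unit vector of increasing column index
    col_dir: Vec3                 # unit vector of increasing row index
    spacing: Tuple[float, float]  # (row spacing, column spacing) in mm
    hu: List[List[float]]         # hu[row][col]
    normal: Vec3 = field(init=False)

    def __post_init__(self) -> None:
        self.normal = cross(self.row_dir, self.col_dir)


def ray_absorption(src: Vec3, dst: Vec3, slices: Sequence[Slice]) -> float:
    """Sum of HU values where the ray crosses each slice, divided by the number of slices."""
    d = sub(dst, src)
    total = 0.0
    for s in slices:
        denom = dot(d, s.normal)
        if abs(denom) < 1e-12:  # ray parallel to this slice: no crossing
            continue
        t = dot(sub(s.origin, src), s.normal) / denom  # ratio of dot products
        if not 0.0 <= t <= 1.0:  # crossing lies outside the generator-detector segment
            continue
        hit = (src[0] + t * d[0], src[1] + t * d[1], src[2] + t * d[2])
        rel = sub(hit, s.origin)
        col = round(dot(rel, s.row_dir) / s.spacing[1])  # nearest pixel
        row = round(dot(rel, s.col_dir) / s.spacing[0])
        if 0 <= row < len(s.hu) and 0 <= col < len(s.hu[0]):
            total += s.hu[row][col]
    return total / len(slices) if slices else 0.0


def detector_points(c00: Vec3, c10: Vec3, c01: Vec3, nx: int, ny: int) -> List[Vec3]:
    """Subdivide the detector between its corner points into an nx-by-ny grid (row-major)."""
    ex, ey = sub(c10, c00), sub(c01, c00)
    pts = []
    for v in range(ny):
        fv = v / (ny - 1) if ny > 1 else 0.0
        for u in range(nx):
            fu = u / (nx - 1) if nx > 1 else 0.0
            pts.append(tuple(c00[k] + fu * ex[k] + fv * ey[k] for k in range(3)))
    return pts


def virtual_xray(src: Vec3, c00: Vec3, c10: Vec3, c01: Vec3, nx: int, ny: int,
                 slices: Sequence[Slice]) -> List[List[float]]:
    """Cast one ray per detector point; return an ny-by-nx image of absorption values."""
    values = [ray_absorption(src, p, slices) for p in detector_points(c00, c10, c01, nx, ny)]
    return [values[r * nx:(r + 1) * nx] for r in range(ny)]
```

Nearest-pixel sampling keeps the sketch short; interpolating between neighbouring pixels would give smoother images at extra cost.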

Simulation programming

The simulation program for user movement and C-arm procedure performance was optimized for the Oculus Quest 2 (Meta Platforms, USA), but it was also made compatible with other VR devices through the XR Interaction Toolkit® (Unity, USA).

To create a realistic virtual environment resembling a room where actual procedures are performed, the procedure room, surgical table, C-arm, and monitor were created as 3D models and placed appropriately in the virtual space. The procedure room was configured so that the patient’s position could be changed, allowing learners to perform the procedure on their preferred side. The surgical table was divided into several parts to allow functions such as height adjustment, and the C-arm was likewise divided into several parts for rotation, tilt, height adjustment, and movement.

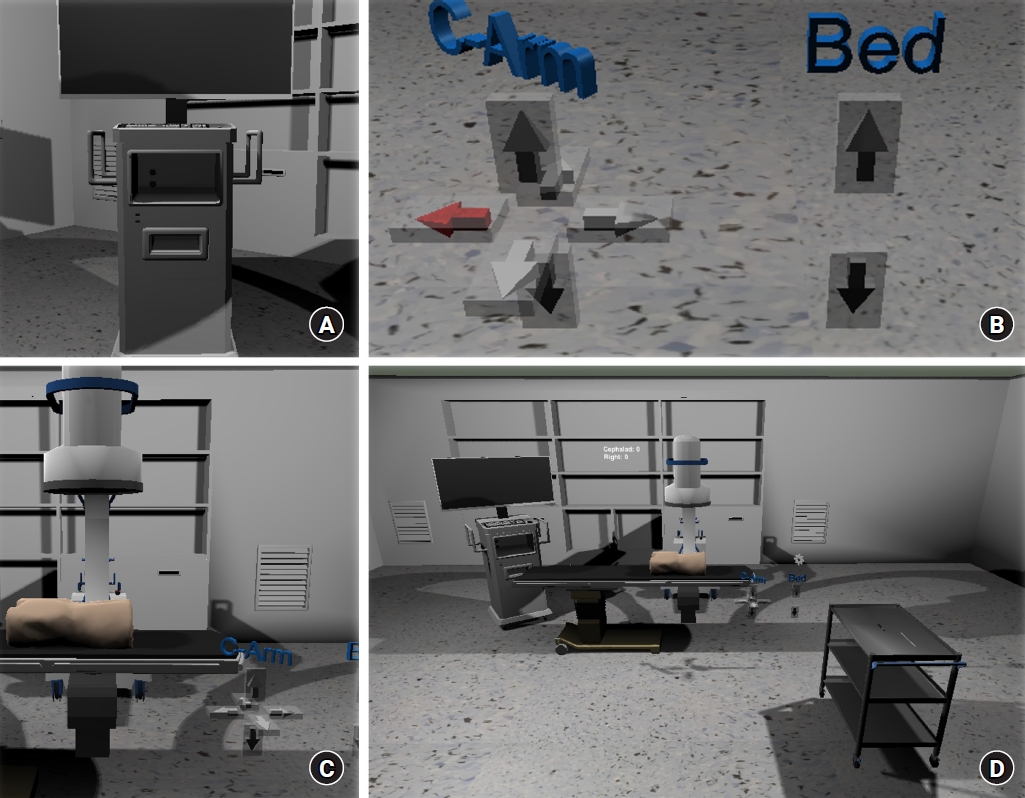

The monitor displayed the real-time X-ray image on the left and the stored image on the right (Fig. 3A). For the trainee’s convenience, two sets of virtual arrows for adjusting the C-arm and the surgical table (Fig. 3B) were placed next to them, within easy reach of the trainee (Fig. 3C). The table holding the needle and indicators was also placed near the surgical table (Fig. 3D).

The virtual simulator room. (A) The monitor displaying the real-time X-ray image on the left and the stored image on the right. (B) Virtual arrows placed for the C-arm and surgical table movement. (C) The C-arm and surgical table with virtual arrows. (D) Overall view of the room.

On the left controller, the primary axis controlled the rotation and tilt of the C-arm, and the primary and secondary buttons were used to acquire and save virtual C-arm images. On the right controller, the primary axis allowed the learner to move freely, and the primary and secondary buttons moved the needle forward and backward in fine increments during insertion. The controllers’ grip and trigger buttons were used to pick up or grab tools and to execute functions.
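The controller layout can be expressed as a lookup table. This Python sketch is hypothetical: the action names, the split of acquire versus save between the primary and secondary buttons, and the 0.5 mm needle step are assumptions, and in practice such bindings would be set up within the UNITY project rather than in Python.

```python
from enum import Enum
from typing import Dict, Optional, Tuple


class Hand(Enum):
    LEFT = "left"
    RIGHT = "right"


# Hypothetical action names; the acquire/save and advance/withdraw splits are assumptions.
CONTROLLER_MAP: Dict[Tuple[Hand, str], str] = {
    (Hand.LEFT, "primary_axis"): "rotate_and_tilt_c_arm",
    (Hand.LEFT, "primary_button"): "acquire_c_arm_image",
    (Hand.LEFT, "secondary_button"): "save_c_arm_image",
    (Hand.RIGHT, "primary_axis"): "move_user",
    (Hand.RIGHT, "primary_button"): "advance_needle",
    (Hand.RIGHT, "secondary_button"): "withdraw_needle",
}
for hand in Hand:  # grip and trigger behave the same on both controllers
    CONTROLLER_MAP[(hand, "grip")] = "grab_tool"
    CONTROLLER_MAP[(hand, "trigger")] = "execute_function"

NEEDLE_STEP_MM = 0.5  # hypothetical fine-movement increment


def action_for(hand: Hand, control: str) -> Optional[str]:
    """Look up the action bound to a controller input, or None if it is unbound."""
    return CONTROLLER_MAP.get((hand, control))


def needle_depth_after(depth_mm: float, action: Optional[str]) -> float:
    """Apply one fine needle movement; other actions leave the depth unchanged."""
    if action == "advance_needle":
        return depth_mm + NEEDLE_STEP_MM
    if action == "withdraw_needle":
        return max(0.0, depth_mm - NEEDLE_STEP_MM)
    return depth_mm
```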

To enhance the performance and convenience of the simulation, the previously obtained 3D polygonized model and a 3D bone-like image consisting of CT image slices (Figs. 4A and B) were placed in the same spatial position in the virtual simulation space (Figs. 4C and D). Subsequently, the CT image slices were made invisible (Figs. 4E and F). The 3D data of the body contour was also displayed in the correct spatial position (Fig. 4G).

The obtained 3D polygonized model and a 3D bone-like image consisting of CT image slices placed in the virtual simulation space. (A, B) 3D bone-like image obtained by visualizing only HU values between 300 and 2,000. (C, D) Spatially matched 3D bone-like image and 3D polygonized model. (E, F) 3D polygonized model. (G) 3D polygonized model with skin contour displayed. CT: computed tomography, HU: Hounsfield unit, 3D: three-dimensional.

An indicator and a needle were created to simulate the procedure. Two types of indicator were created: a general bar type and a laser pointer type (Fig. 5A). The laser pointer-type indicator emitted a ray; where the ray touched the skin, a red sphere was generated that was visible on the C-arm image (Fig. 5B). The position of the red sphere could then be finely adjusted using virtual arrow buttons (Fig. 5C). When the bar-type indicator, needle, or sphere intersected the virtual X-rays, a value of 2,000 HU was added so that they appeared as metallic objects on the C-arm image (Fig. 5D).
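A sketch of the metal overlay under stated assumptions: the needle or bar indicator is approximated by points sampled along its shaft, a virtual X-ray is treated as the segment from the generator to a detector point, and any ray passing within a hypothetical radius of a metal point receives the 2,000 HU addition. For the red sphere, the same distance test with the sphere's centre and radius is an exact segment–sphere intersection test. How the authors combined the 2,000 HU with the per-slice sum is not specified, so the value is simply returned for the caller to add.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
METAL_HU = 2000.0  # value added when a ray meets a metal object (from the text)


def segment_point_distance(a: Vec3, b: Vec3, p: Vec3) -> float:
    """Shortest distance from point p to the segment a-b."""
    ab = [b[k] - a[k] for k in range(3)]
    ap = [p[k] - a[k] for k in range(3)]
    len2 = sum(c * c for c in ab)
    t = 0.0 if len2 == 0.0 else max(0.0, min(1.0, sum(ap[k] * ab[k] for k in range(3)) / len2))
    closest = [a[k] + t * ab[k] for k in range(3)]
    return math.dist(closest, p)


def sample_shaft(tip: Vec3, hub: Vec3, n: int) -> List[Vec3]:
    """Approximate a straight needle or bar indicator by n >= 2 evenly spaced points."""
    return [tuple(tip[k] + (hub[k] - tip[k]) * i / (n - 1) for k in range(3)) for i in range(n)]


def metal_contribution(src: Vec3, dst: Vec3, metal_points: Sequence[Vec3], radius: float) -> float:
    """METAL_HU if the generator-to-detector ray passes within `radius` of any metal point."""
    if any(segment_point_distance(src, dst, p) <= radius for p in metal_points):
        return METAL_HU
    return 0.0
```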

The indicator and needle used for simulation of the procedure. (A) *General bar-type indicator, †Laser pointer-type indicator, ‡Needle for the procedure. (B) §A red sphere generated by the laser pointer-type indicator. (C) Virtual arrow buttons for the fine adjustment of the red sphere. (D) The indicators and needle appear as metallic material on the virtual X-ray.

Because UNITY has difficulty supporting a mesh collider with more than 255 polygons, simplified pelvis and body models of fewer than 200 polygons each were constructed separately for efficiency and used to detect collisions with the needle and the laser pointer-type indicator (Fig. 6A). When the needle tip contacted the skin, its entry point could be fine-tuned with the virtual arrow buttons to enable precise manipulation (Fig. 6B). Once the needle had entered the skin, the insertion point could no longer be adjusted, but the needle could be advanced while the angle of its portion outside the skin was finely adjusted (Fig. 6C). The needle tip position was mapped to the corresponding location on the CT slice it touched. When the HU value at that location was 300 or higher, the needle was judged to have touched bone; further advancement was blocked, and the trainee received feedback in the form of a vibration. The trainee performed the procedure by checking the positions of the spine and needle with the virtual C-arm.

After all the procedures were completed, the procedural outcomes could be reviewed on a results screen (Fig. 6D and Supplementary Video 1). By making the skin and bones invisible and allowing 3D visualization from multiple directions, the VRS program could help trainees learn LTFEB and gain a clear understanding of the spatial relationship between the anatomy and the needle. Trainees were taught to place the needle posterior to the vertebral body and just anterolateral to the superior articular process.
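The bone-contact rule can be sketched as a stepwise advance that stops before the first position at or above 300 HU. The step size, the callback standing in for the controller vibration, and the `hu_at` lookup (a hypothetical stand-in for mapping the tip to the touched CT slice location) are assumptions; the skin-entry lock is not modelled.

```python
from typing import Callable, Optional, Tuple

Vec3 = Tuple[float, float, float]
BONE_HU = 300.0  # threshold from the text: HU >= 300 is treated as bone


def advance_needle(tip: Vec3, direction: Vec3, step_mm: float, steps: int,
                   hu_at: Callable[[Vec3], float],
                   on_bone: Optional[Callable[[], None]] = None) -> Tuple[Vec3, bool]:
    """Advance the needle tip along a unit `direction` by up to `steps` increments.

    Advancement stops before the first position whose HU value is >= BONE_HU,
    and `on_bone` (e.g. triggering a controller vibration) is called once.
    Returns the final tip position and whether bone was touched.
    """
    for _ in range(steps):
        nxt = (tip[0] + step_mm * direction[0],
               tip[1] + step_mm * direction[1],
               tip[2] + step_mm * direction[2])
        if hu_at(nxt) >= BONE_HU:
            if on_bone is not None:
                on_bone()
            return tip, True
        tip = nxt
    return tip, False
```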

Evaluation of the simulator

Participants

The study protocol was reviewed and approved by the clinical trial review board of Yonsei University Gangnam Severance Hospital (3-2021-0237). This study was conducted in accordance with the ethical principles of the Declaration of Helsinki (2013) and followed good clinical practice guidelines. The study was registered at ClinicalTrials.gov (NCT05029219). Twenty first- or second-year residents in anesthesiology and pain medicine training with no experience in C-arm-guided spine procedures were included; residents already familiar with these procedures were excluded. All participants were randomly allocated to one of two groups: the VRS group (Group V, n = 10) or the control group (Group C, n = 10). Before group allocation, envelopes containing the group information were numbered sequentially and sealed. The sealed envelopes were opened by an investigator unaware of the trainees’ assessments. Written informed consent was obtained from all participants.

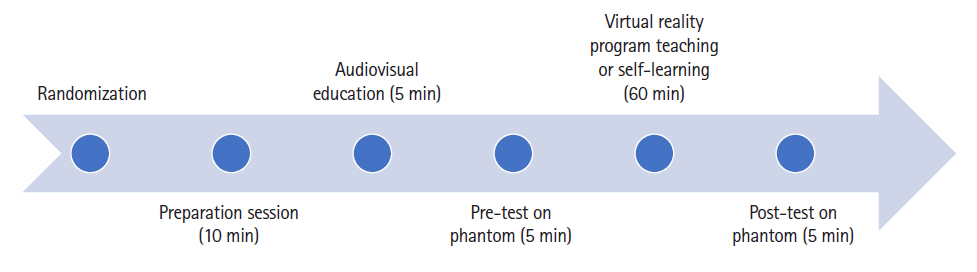

Curriculum and tests

The entire curriculum is summarized in Fig. 7. The following data were collected for all participants: gender, age, year of residency training, and previous experience performing, observing, and assisting with C-arm-guided spinal procedures. The participants then watched a five-minute video of one of the authors performing a single-level, unilateral LTFEB under C-arm guidance. The narration was based on Furman’s book [15] and covered the fundamentals of the C-arm, the direction of the X-ray beam, the relative positions of the needle and beam during the procedure, the anatomy of the lumbar spine, and the technique for performing LTFEB.

After this basic introduction, all participants performed LTFEB (lumbar 4–5, left) on a phantom as a pre-test. The phantom was prepared as described in a previous study [16]. Each assessment recorded the checklist score, global rating score, procedure duration (seconds), number of C-arm images taken, and satisfaction score (0–5). Participants in the VRS group (Group V) were trained individually for approximately one hour using a VR headset and an Oculus program. Participants in the control group (Group C) studied individually for approximately one hour using the video material and Furman’s book [15], which had been provided to all participants in advance for review. After all training was completed, a post-test was conducted in the same way as the pre-test. Each performance was assessed by physicians with expertise in the field of pain assessment who were unaware of the group assignment.

Outcome measures

The primary outcome was the change in the checklist score (post-test vs. pre-test), a measure of proficiency in performing C-arm-guided LTFEB that has been used in several previous studies [16–18]. The validated LTFEB checklist [17] consisted of seven task-specific items, each scored as either ‘yes’ or ‘no’ (Appendix 1). The global rating scale [17,19] consisted of seven items, each scored out of five points (Appendix 2). The procedural time and the number of C-arm shots were also evaluated. Procedure time was defined as the time from acquisition of the first X-ray image until administration of the injectate was completed. Participant satisfaction was assessed after all courses were completed, with answers ranging from 1 to 5 (1 = unsatisfactory, 5 = satisfactory).
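The scoring arithmetic can be sketched as below. Treating each global rating item as a 1-to-5 rating (so totals range from 7 to 35) and the helper names are assumptions for illustration; the item wording itself is given in Appendices 1 and 2.

```python
from typing import Sequence


def checklist_score(answers: Sequence[bool]) -> int:
    """Seven task-specific yes/no items; the score is the number of 'yes' answers (0-7)."""
    if len(answers) != 7:
        raise ValueError("expected 7 checklist items")
    return sum(1 for a in answers if a)


def global_rating_score(ratings: Sequence[int]) -> int:
    """Seven items, each assumed to be rated 1-5; the total therefore ranges from 7 to 35."""
    if len(ratings) != 7 or any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("expected 7 ratings between 1 and 5")
    return sum(ratings)


def change(pre: float, post: float) -> float:
    """Change score used for the primary outcome: post-test minus pre-test."""
    return post - pre
```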

Statistical analysis

In a related previous study [17], the checklist score differed by five points between a control group that attended a didactic session and a low-fidelity group trained on a foam-covered plastic spine. Assuming that the median checklist score equals the mean checklist score, we conservatively assumed half of this difference (2.5 points) as the expected difference in our study. The standard deviation was estimated by dividing the interquartile range by 1.35, and the sample size was calculated using the larger of the two group standard deviations (1.5). With a power of 0.9 and a significance level of 0.05, the required number of participants calculated using G*Power® (version 3.1.9.7; Germany) was nine per group [20]. Allowing for a 10% dropout rate, 10 participants per group were deemed necessary. Continuous variables are presented as medians and interquartile ranges, depending on normality. Categorical demographic variables are presented as numbers. Continuous participant characteristics were compared between groups using the Mann–Whitney U test, and categorical demographic data were analyzed using Pearson’s chi-square test or Fisher’s exact test. The Mann–Whitney U test was used to compare the groups’ absolute pre-test and post-test values for the primary and secondary outcomes, as well as the between-group difference in the change in each outcome. The Wilcoxon signed-rank test was used to compare values before and after training within each group. Statistical significance was set at P < 0.05. All data were analyzed using SPSS Statistics for Windows (Version 24.0, IBM Corp., USA).
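The sample-size reasoning can be reproduced approximately with Python's standard library. The text does not state which G*Power test family was used; this sketch assumes a two-sided two-sample t-test and replaces G*Power's exact noncentral-t computation with a normal approximation plus the small-sample correction term z²/4 (often attributed to Guenther). With a 2.5-point difference, SD 1.5, α = 0.05, and power 0.9, it gives nine per group and ten after a 10% dropout allowance, consistent with the figures above.

```python
import math
from statistics import NormalDist


def sd_from_iqr(iqr: float) -> float:
    """Approximate a standard deviation from an interquartile range (normal data): IQR / 1.35."""
    return iqr / 1.35


def n_per_group(diff: float, sd: float, alpha: float = 0.05, power: float = 0.9) -> int:
    """Per-group n for a two-sided, two-sample comparison of means.

    Normal approximation 2 * (z_{1-alpha/2} + z_{power})^2 / d^2 with d = diff / sd,
    plus the small-sample correction z_{1-alpha/2}^2 / 4, rounded up.
    """
    z = NormalDist().inv_cdf
    z_a, z_b = z(1 - alpha / 2), z(power)
    d = diff / sd
    return math.ceil(2 * (z_a + z_b) ** 2 / d ** 2 + z_a ** 2 / 4)


def inflate_for_dropout(n: int, dropout: float) -> int:
    """Enlarge n so that about n participants remain after the expected dropout."""
    return math.ceil(n / (1 - dropout) - 1e-9)  # tolerance guards against float round-up


n = n_per_group(diff=5 / 2, sd=1.5)  # half of the 5-point difference reported in [17]
print(n, inflate_for_dropout(n, 0.10))  # 9 10
```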

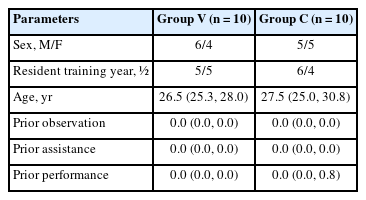

Results

Overall, 20 residents participated in the study. Table 1 presents their demographic data. Their previous experience with performing, observing, and assisting C-arm-guided spinal procedures was comparable. There were no significant differences between the two groups in any demographic parameters.

Fig. 8 shows the mean checklist and global rating scores. Mean post-test scores increased relative to pre-test scores in both groups. However, the improvement in the global rating score was significantly greater in group V than in group C.

Changes in the mean checklist and global rating scores in both groups. Group V denotes participants trained with the VRS. VRS: virtual reality simulator. *P < 0.05 for the within-group comparison (Wilcoxon signed-rank test); †P < 0.05 for the between-group comparison (Mann–Whitney U test).

Compared with pre-test scores, the checklist score increased after training in both groups (group V, P = 0.004; group C, P = 0.041), and the degree of change did not differ significantly between the groups. The global rating score also increased after training in both groups (group V, P = 0.005; group C, P = 0.027), and the increase was significantly greater in group V than in group C (P = 0.014). After training, the time taken to complete the procedure (P = 0.025) and the total number of C-arm images taken (P = 0.001) were significantly lower in group V than in group C. In all participants, the procedure duration and number of X-ray images taken decreased from the pre-test to the post-test. Participants in group V reported a higher overall satisfaction score than those in group C (P = 0.007).

Discussion

In the present study, we developed a VRS for spine procedures and evaluated its effectiveness in training inexperienced trainees. To our knowledge, this is the first VRS developed directly by a physician with long-term experience in applied pain medicine. Compared with commonly used learning methods, LTFEB training with the VRS improved the global rating score. In addition, the VRS group had a shorter procedure time and took fewer C-arm images than the conventional learning group.

Generation of virtual X-ray images has also been attempted in various fields [20–23]. However, we found no previous study that implemented a simulation using a C-arm. Other studies are limited to a single view, such as an anteroposterior [22,23] or lateral view [22], or to an image at a specific angle, whereas images generated by a C-arm are more relevant. Even at a given angle, the image changes greatly depending on the positional relationship among the generator, detector, and target objects, including procedure instruments [21], and we found no previous simulation that properly reflected this [22,23]. In addition, virtual X-ray images must be provided in real time to simulate the procedure in a virtual space. However, using DICOM data directly in a virtual space without optimized programming techniques is difficult and can limit its applications [24]. Although this could be achieved through a large-scale, long-term project involving multiple technicians, securing a large budget for an educational endeavor is usually difficult. In addition, commercially produced software may increase production costs, which would limit trainees’ access.

An open-source program for UNITY that can freely read, write, and edit DICOM data in VR is currently unavailable; therefore, we created a program to visualize DICOM data in a virtual space and manipulate it directly [24,25]. Our program can build a simulation for an actual patient in a short time. It could therefore also be used for pre-procedure planning and simulation and potentially act as a real-time intraoperative guide. In addition, it could be applied in research settings and facilitate simulations in various other medical fields.

Compared with other simulators, the VRS used in this study has the advantage of having been developed by a doctor who performs and teaches the procedure [24,26]. Despite many advances in reproducing reality with VR, doing so remains challenging owing to limitations in the performance and price of equipment; it is difficult to reproduce actual tactile sensations or implement very fine movements. Therefore, to overcome these limitations and use VR effectively for education, developers must be able to experience the simulation directly as users and repeatedly modify it according to their needs. For example, in this simulator, training to understand 3D structures from 2D X-ray images is essential. To serve this purpose while reproducing the actual procedure, fine movement control should be implemented only for the appropriate parts of the simulation, and vibration should substitute for tactile feedback. In addition, a verification process is needed to ensure that this support maximizes learning effectiveness. Therefore, only a person familiar with the actual procedure could develop such a simulator.

Another significant strength of our simulator is that, unlike training with cadavers or phantoms, it avoids the risk of radiation exposure [11,12,16]. Koh et al. [16] suggested that a spinal procedure could be taught effectively and inexpensively using a 3D-printed lumbar spine phantom; however, education with phantoms cannot avoid radiation exposure. Although Hashemi et al. [27] reported that ultrasound-guided LTFEB was accurate and feasible in a clinical setting, this approach is not recommended by the 2021 American Society of Interventional Pain Physicians guidelines [28].

We expected that inexperienced residents would learn LTFEB more efficiently with our simulator than with an alternative training method. Consistent with this, group V showed a significantly greater increase in the global rating score and significantly lower procedure time and number of C-arm images than group C. We attribute this result to the trainees’ access to the program and their ability to refine their technique repeatedly by practicing LTFEB in the simulator. With repeated practice, inexperienced trainees gradually become proficient in the procedure. Checking the 3D structure of the vertebra and confirming the relative position of the needle tip at the end of the procedure are features that cannot be replicated, even in a clinical setting with an actual patient.

The change in the checklist score before and after training did not differ significantly between the groups. Presumably, because each checklist item was answered in binary form, the checklist could not distinguish finer levels of proficiency. In addition, several limitations of our simulator may explain why the checklist score in group V did not improve more than that in group C. We were unable to completely resolve limitations inherent to VRS, such as dizziness and limited tactile reproducibility. Moreover, the patient CT data available for use were usually routine spine CT images rather than high-density CT data. It was therefore difficult to achieve high resolution and image quality in the virtual X-rays, which may have affected the efficiency of the learning process.

This study had several limitations. First, because proficiency was evaluated on a phantom, there was inevitably a gap between the test setting and the clinical situation of performing the technique on an actual patient. Second, participants could not be blinded because we compared VRS with traditional educational methods; therefore, our approach can be criticized for not comparing VRS and traditional methods under equal and unbiased conditions. Third, because the VRS taught only the procedural technique, it could not teach trainees about potential complications such as vessel or nerve injury. Fourth, only LTFEB was taught in this study. However, the simulator can be used to simulate other C-arm-guided procedures using CT data from relevant patients, and we aim to conduct further studies to evaluate its effectiveness for learning other procedures.

Herein, we describe a new simulator that enables inexperienced trainees to learn LTFEB effectively without radiation exposure, and we confirm its educational efficacy. Compared with commonly used learning methods, the VRS for LTFEB improved the learning efficacy of inexperienced trainees. VRS for various spinal procedures should be investigated further as a remotely accessible alternative for educating early-career trainees.

Notes

Funding

This work was supported by the Korea University Research Grant (No. K2023041).

Conflicts of Interest

No potential conflict of interest relevant to this article was reported.

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Author Contributions

Ji Yeong Kim (Data curation; Formal analysis; Investigation; Methodology; Project administration; Writing – original draft; Writing – review & editing)

Jong Seok Lee (Conceptualization; Data curation; Formal analysis; Investigation; Methodology)

Jae Hee Lee (Investigation; Methodology; Project administration; Resources)

Yoon Sun Park (Resources; Validation; Visualization; Writing – original draft; Writing – review & editing)

Jaein Cho (Data curation; Investigation; Methodology; Resources; Software)

Jae Chul Koh (Conceptualization; Data curation; Formal analysis; Funding acquisition; Investigation; Methodology; Project administration; Resources; Software; Supervision; Validation; Visualization; Writing – original draft; Writing – review & editing)

Supplementary Material

The virtual C-arm, patient X-ray images, needle, and indicator were all operable in real-time.